Almost all image processing, 3D rendering, and shading algorithms written today assume the image is in linear floating point. For example, most renderers assume that a projected light, if spread across an area twice as large as before, will produce an image value half what it was originally, because in the real world half as many photons reach each portion of the surface.

Unfortunately, almost all software today assumes images are stored in files by multiplying the linear floating point value by some constant (usually 255). In reality, when these images are displayed on a screen, the resulting luminances follow the sRGB curve. A stored number half as big as another is less than 1/4 as bright on screen, not 1/2 as bright as the algorithm expected.
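To make the "less than 1/4 as bright" claim concrete, here is a minimal sketch that decodes 8-bit values with the standard sRGB transfer function. The function name `srgb_to_linear` is illustrative and not taken from any particular renderer; the constants are the usual piecewise sRGB ones.

```python
def srgb_to_linear(v: float) -> float:
    """Decode an sRGB-encoded value in [0, 1] to linear light in [0, 1]."""
    if v <= 0.04045:
        # Short linear segment near black.
        return v / 12.92
    # Power-law segment covering the rest of the range.
    return ((v + 0.055) / 1.055) ** 2.4


# Luminance a display produces for byte value 255 and for byte value 128.
full = srgb_to_linear(255 / 255)
half = srgb_to_linear(128 / 255)

# Ratio of displayed luminances; it comes out a bit above 0.2, below 1/4.
ratio = half / full
print(f"byte 128 is {ratio:.3f} times as bright as byte 255")
```

An algorithm that treats the byte values as linear would expect this ratio to be about 0.5.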

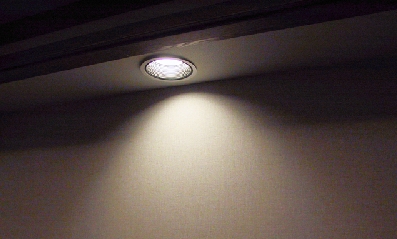

Here is a photograph of a real scene, showing some overlapping lights.

#1

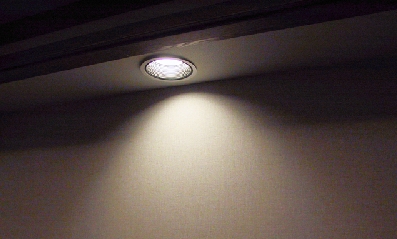

Here is a simulation using rather simple but physically accurate light calculations. The floating point output of the shading calculations was converted to sRGB so that the resulting display has the same luminance as the shading algorithm thought it was making. Notice that this did quite well, without any manual adjustment of the shaders:

#2

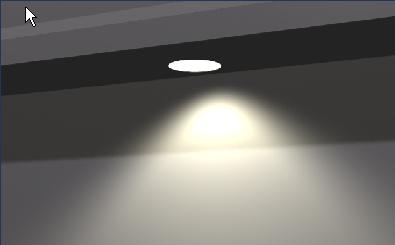

Here is what most renderers do when trying to simulate this scene. They calculate the light quite accurately in linear floating point, but then assume that they can store the image by multiplying by 255 to get the bytes that go into the file:

#3

Most users of rendering software today see results like #3 above and conclude that lighting is much more complex and less scientific than they thought. They then throw in fake lights (like global fill), paint objects strange colors, or write elaborate shaders that do not match the laws of physics, all to compensate for this wrong conversion and produce the desired output. This is tedious and never results in a perfect image. It could be solved by having renderers correctly convert from linear to sRGB.
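As a rough illustration of the two output paths, the sketch below compares the naive multiply-by-255 store with an sRGB-encoded store for a single linear value. The names `linear_to_srgb`, `encode_naive`, and `encode_srgb` are hypothetical helpers, not the API of any renderer, and the sketch assumes 8-bit output and the standard sRGB transfer function.

```python
def linear_to_srgb(x: float) -> float:
    """Encode a linear-light value in [0, 1] with the sRGB transfer function."""
    x = min(max(x, 0.0), 1.0)  # clamp to the displayable range
    if x <= 0.0031308:
        # Short linear segment near black.
        return 12.92 * x
    # Power-law segment covering the rest of the range.
    return 1.055 * x ** (1.0 / 2.4) - 0.055


def to_byte(v: float) -> int:
    """Quantize a value in [0, 1] to an 8-bit integer, rounding half up."""
    return int(min(max(v, 0.0), 1.0) * 255.0 + 0.5)


def encode_naive(linear: float) -> int:
    """What the text describes most renderers doing: scale linear by 255."""
    return to_byte(linear)


def encode_srgb(linear: float) -> int:
    """Convert linear to sRGB first, then scale to a byte."""
    return to_byte(linear_to_srgb(linear))


# A surface the shader computed as half as bright as white.
half_bright = 0.5
print("naive byte:", encode_naive(half_bright))
print("sRGB byte: ", encode_srgb(half_bright))
```

The sRGB path stores a noticeably larger byte for the same linear value, which is why the naive path displays mid-tones far darker than the shader intended.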

(Photos and renderings by Dan Lemmon)

Maybe these problems will be addressed soon?