sRGB is a standard for encoding luminance into 8 bits (or into any integer space). This standard was developed by Hewlett-Packard and Microsoft, and has been endorsed by the W3C, EXIF, Intel, Pantone, Corel, and many other industry players. It is also well accepted by open-source software and formats such as GIMP and the PNG file format.

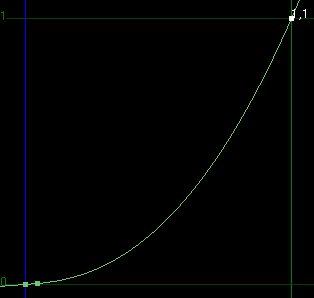

The standard defines the luminance of a value stored in an image file. This is a relative luminance, where 1.0 means "the brightest color the display can produce". After scaling a number from a file into the 0-1 range, sRGB defines the luminance by the function:

v < .04045 ? v / 12.92 : pow((v+.055)/1.055, 2.4)

It is important to know that sRGB is designed to match what current monitors do. (They actually tend to have a zero slope at the bottom; sRGB defines the linear section at the bottom to avoid difficulties when converting to and from it.) For this reason, your typical 8-bit file found on the internet or in your digitized photos can be assumed to be in sRGB encoding.

(sRGB also defines the color, but my work is not concerned with this)